Dancing with Code: My Experimental Motion Chronicles

– ArtXTech

The GSAP Glory Days: Pumping out Banners like there’s no tomorrow

Back in the day, my life was all about GSAP (GreenSock) and those bloody web banners. If you know, you know—it was the era of squeezing every bit of juice out of a few kilobytes.

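```javascript
import { gsap } from "gsap";

const logo = document.querySelector('.logo');
const title = document.querySelector('.title');
const ctaButton = document.querySelector('.cta-button');

// Loop the banner forever, pausing 2 seconds between cycles.
const tl = gsap.timeline({ repeat: -1, repeatDelay: 2 });

tl.from(logo, { duration: 0.5, opacity: 0, x: -50, ease: "power2.out" })
  // "-=0.2" starts the title 0.2s before the current end of the timeline, overlapping the logo.
  .from(title, { duration: 0.7, opacity: 0, y: 30, ease: "back.out(1.7)" }, "-=0.2")
  // No position parameter: the CTA pops in with an elastic overshoot once the title has landed.
  .from(ctaButton, { duration: 0.5, scale: 0, ease: "elastic.out(1, 0.5)" });
```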

Back then, this was the bread and butter. It was about precision, timing, and making things pop without breaking the browser. Proper old-school stuff.
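For anyone new to GSAP's position parameter: `"-=0.2"` places a tween 0.2 s before the current end of the timeline, which is what makes the title overlap the logo in the banner timeline above. The helper below is a hypothetical sketch, not part of GSAP. It reproduces only that rule plus the default "append at the end" behaviour, to show where each tween lands.

```typescript
// Hypothetical helper, NOT part of GSAP: it mimics just two position rules,
// "no position" (append at the end) and "-=x" (start x seconds before the end).
interface Step {
  name: string;
  duration: number; // seconds
  overlap?: number; // seconds; the x in "-=x"
}

interface Placed {
  name: string;
  start: number;
  end: number;
}

function layoutTimeline(steps: Step[]): { placed: Placed[]; duration: number } {
  let timelineEnd = 0;
  const placed: Placed[] = steps.map((step) => {
    // Each tween starts at the current end of the timeline, pulled back by its overlap.
    const start = timelineEnd - (step.overlap ?? 0);
    const end = start + step.duration;
    timelineEnd = Math.max(timelineEnd, end);
    return { name: step.name, start, end };
  });
  return { placed, duration: timelineEnd };
}

// The three tweens from the banner timeline.
const banner = layoutTimeline([
  { name: "logo", duration: 0.5 },
  { name: "title", duration: 0.7, overlap: 0.2 },
  { name: "ctaButton", duration: 0.5 },
]);

for (const p of banner.placed) {
  console.log(`${p.name}: ${p.start.toFixed(2)}s to ${p.end.toFixed(2)}s`);
}
```

For the banner, this puts the logo at 0 to 0.5 s, the title at 0.3 to 1.0 s and the CTA at 1.0 to 1.5 s. Each 1.5 s cycle is then followed by the 2 s `repeatDelay` before the loop restarts.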

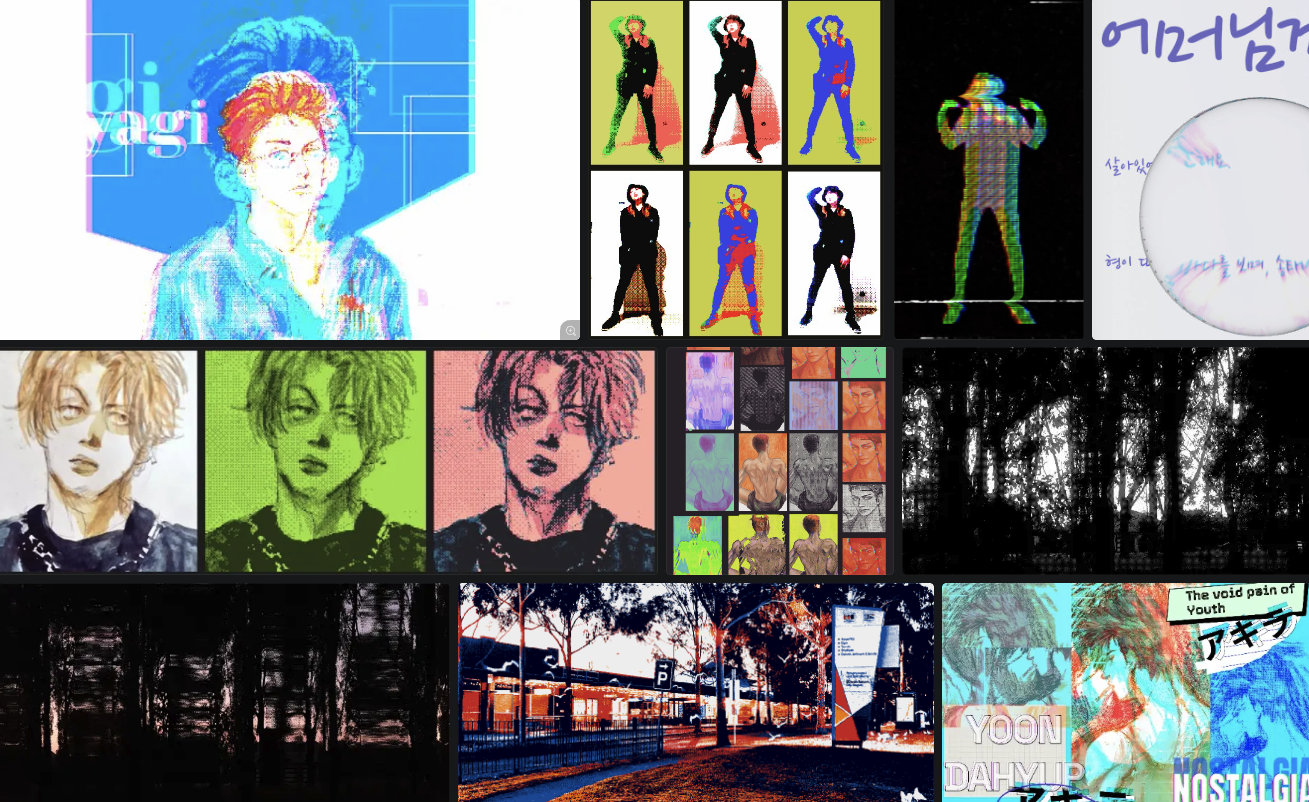

GSAP Banners I made back in the day

These animations were made purely with SpriteKit, GSAP, CSS3, and HTML5.

All because of that bloody 50 KB limit tied to click/impression traffic costs… Far out.

The Void: Losing my touch with Web Animation

Then came a massive gap. Life happened, and I reckon I lost that “feel” for web animation. You know when you look at code and you know what it does, but the magic, “the flow”, is just missing? Yeah, that. I was moping in front of my monitor, trying to figure out why my transitions felt so clunky.

For a heap of reasons, I started questioning the direction of my career. To top it off, a whole lot of shit hit the fan all at once, my stress levels went through the roof, and the burnout manifested physically. My health went to the pack. Eventually, in 2024, I took a break from the industry, resigned, and went back to uni to finish the Data Science Master’s degree I’d put on hold.

Entering the second half of ’24, I suddenly felt this massive sense of crisis—my creative coding and UX design system skills were getting rusty. So, I picked up GSAP, PIXI.js, and Three.js again. It had been a solid five years.

The game had changed completely. The build tools I used to flog—Gulp, Grunt, Webpack—seemed to have been overtaken by Vite. I almost missed the simple days of just chucking a CDN link in the HTML and getting on with it. I had to spend a fair chunk of time just sussing out how Vite handles its asset and code pipelines.

But then, I had a bit of a laugh. Even during the four years at my last job, I remember being flat out learning all the new tech that kept spewing out. But all those years spent chasing trends and renewing certs didn’t amount to much of an asset for me personally. My code, once strong on clean readability and consistent structure, was rusting away because I was too busy slapping things together to meet deadlines for clients and colleagues who couldn’t give a rat’s about ‘Best Practice.’ It’s ironic as hell: I was devoted to the company and the projects, but I didn’t feel like I leveled up at all.

It’s not like I don’t get it, though. The learning the business requires is just chasing certs for new platforms. These certs change every time the architecture shifts, and what I knew became obsolete garbage. That’s different from updating your understanding of the CSS or TypeScript ecosystems. I had to sustain this core-less learning for four and a half years, and the qualifications needed to win projects became useless after six months. The absolute worst part? I didn’t want this. I had to do it just to stay employed and pay the bills. It’d be weird if I didn’t burn out.

In the past, we crafted things cleanly with nothing but HTML5, CSS3, jQuery, and Vanilla JS, but now handling everything on top of React’s component architecture is the standard. Even though I’ve used React for four years, building UI style guides and implementing web animations on it felt like a whole new headache. Building a web app in React isn’t hard. Implementing a design system isn’t that tricky either. But implementing frame-by-frame animation without any lag? That’s a different beast. In an ecosystem like React, with its strong implicit rules, debugging visuals for web rendering becomes a nightmare. You can’t debug animation just by checking data or type passing. More than half the time, the console won’t even spit out an error log.

Taking a crack at GSAP and WebGL again, this time with React and TypeScript

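```tsx
// Example of the useGSAP hook for using React and GSAP together
import { useRef } from 'react';
import { gsap } from 'gsap';
import { useGSAP } from '@gsap/react';

// Register the hook as a GSAP plugin, as the @gsap/react docs recommend.
gsap.registerPlugin(useGSAP);

function MyComponent() {
  const container = useRef<HTMLDivElement>(null);

  useGSAP(() => {
    // useGSAP creates GSAP animations in step with the component lifecycle
    // and cleans them up (reverts them) safely when the component unmounts.
    gsap.to(".box", { rotation: "+=360", duration: 2 });
  }, { scope: container }); // scope limits selectors like ".box" to elements inside this container

  return (
    <div ref={container}>
      <div className="box">Hello</div>
    </div>
  );
}
```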

When animation glitches happen, debugging in a pure JS environment is already a pain, but on top of React and Vite, figuring out exactly where the issue lies is infinitely harder. It’s because so many libraries are tangled together. Us frontend devs, why do we always sign up for this hard yakka? Makes you want to have a good cry… bloody hell.

But, by smashing through problems one by one, I started to get my feel back. I even stepped into the world of Shaders, something I’d never touched before.

## The Unexpected Connection: Science X Tech X Engineering X Art X Math

In the industry, I was often drained by repeating tasks that felt meaningless. But now, I’ve regained the joy of drawing a clear blueprint of exactly what I want to make and writing the code to realize it.

As I got the hang of creating graphic art with code and experimenting, I tried out a wider range of effects.

Then, while using Python for image analysis in a uni assignment, I had a thought: ‘Couldn’t I create visual special effects with code?’ I was actively escaping reality by treating my Data Science studies, which I feared might be useless for getting back into the field, as groundwork for my ‘fangirling’ hobbies. Thanks to that, I managed to keep my bum on the seat through the boring bits and got mostly A’s in assignment-based classes.

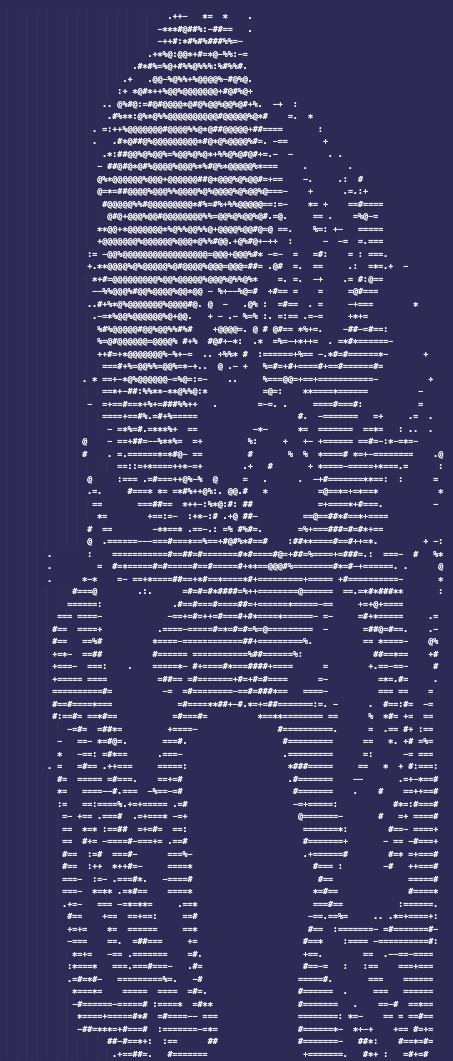

Out of nostalgia for the Game Boy and DOS games of my childhood, I also worked on converting my drawings into ASCII art.

Found out later that GitHub is chock-a-block with code that converts images to ASCII, which felt a bit hollow, but hey, chalk it up to experience.

```mermaid
graph TD
  A[Image input] --> B{Image processing};
  B --> C[Grayscale conversion];
  C --> D[Resizing];
  D --> E{Pixel brightness analysis};
  E --> F[Map to ASCII characters];
  F --> G[ASCII art output];
```
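The pipeline above can be sketched in TypeScript for a tiny in-memory image. This is an illustration under assumptions, not the original project code. Pixels arrive as RGB objects in 0 to 255, and decoding a real image file is left out. Grayscale uses the Rec. 601 luma weights and resizing is plain nearest-neighbour sampling. The character ramp is one common choice, picked for illustration.

```typescript
type RGB = { r: number; g: number; b: number }; // channels in 0..255

// Dense glyphs for dark pixels, a space for white (one common ramp, chosen for illustration).
const DEFAULT_RAMP = "@%#*+=-:. ";

// Grayscale conversion with Rec. 601 luma weights.
function luminance({ r, g, b }: RGB): number {
  return 0.299 * r + 0.587 * g + 0.114 * b;
}

function imageToAscii(pixels: RGB[][], step = 1, ramp = DEFAULT_RAMP): string {
  const lines: string[] = [];
  // Resizing: nearest-neighbour sampling of every `step`-th pixel.
  for (let y = 0; y < pixels.length; y += step) {
    let line = "";
    for (let x = 0; x < pixels[y].length; x += step) {
      // Brightness analysis: 0 (black) to 1 (white), scaled to a ramp index.
      const level = luminance(pixels[y][x]) / 255;
      const index = Math.min(ramp.length - 1, Math.round(level * (ramp.length - 1)));
      line += ramp[index]; // map to an ASCII character
    }
    lines.push(line);
  }
  return lines.join("\n");
}

const black: RGB = { r: 0, g: 0, b: 0 };
const white: RGB = { r: 255, g: 255, b: 255 };
console.log(imageToAscii([[black, white], [white, black]]));
```

Terminal characters are roughly twice as tall as they are wide, so in practice you would sample rows at about twice the column step to keep the proportions.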

Events that Sparked the Creative Fire - Kinetic Typography Born of Rage

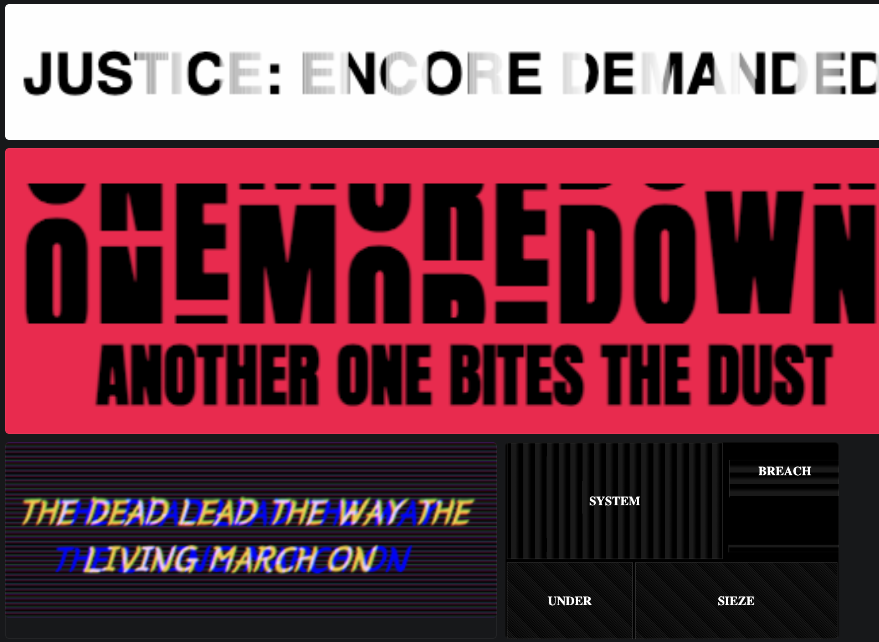

Early December brought shocking news from back home: ‘Martial Law.’ As if a ‘Pig Dictatorship’ wasn’t enough, now Martial Law? What is happening to a perfectly fine country? I was seething with rage, but being overseas, there wasn’t much I could do. I’m an Australian citizen and, on paper, a foreigner in South Korea, but hey, that’s where I grew up and where my cultural and spiritual identity was shaped.

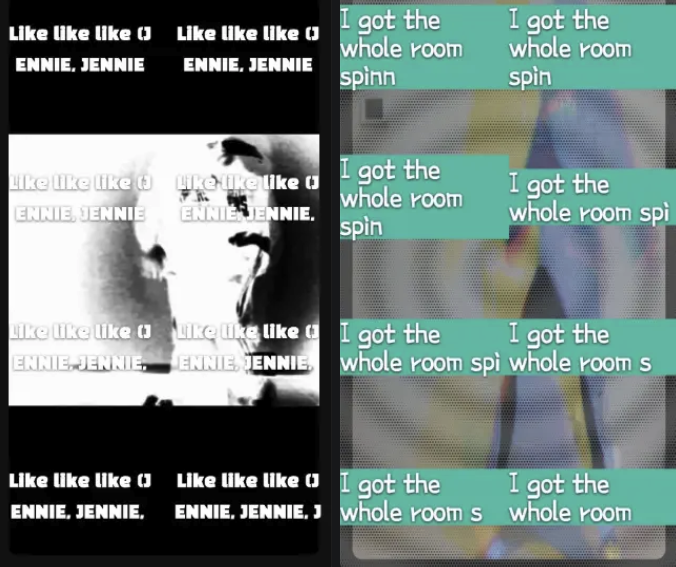

To vent this frustration, I made a Kinetic Typography animation. Instead of complex tools, I chose React and CSS3 to express my emotions in the quickest, most minimal way possible.
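One minimal way to build kinetic type with only React and CSS is to split the text into per-character spans and stagger a CSS animation with `animation-delay`. The helper below is a hypothetical sketch of that idea, not the code behind this piece. The `pop` class and keyframe names in the comment are made up for illustration.

```typescript
// Hypothetical helper: one entry per character, each with a staggered CSS delay.
// In React you might render each entry as
//   <span key={key} className="pop" style={{ display: "inline-block", animationDelay }}>{char}</span>
// with CSS like `.pop { animation: pop 0.4s ease-out both; }` plus a matching @keyframes pop.
interface CharSpan {
  key: number;
  char: string;
  animationDelay: string;
}

function staggerChars(text: string, staggerMs = 40): CharSpan[] {
  // Array.from splits by code point, so characters outside the BMP (many emoji) aren't cut in half.
  return Array.from(text).map((char, i) => ({
    key: i,
    // A lone space collapses inside an inline-block span; swap in a no-break space.
    char: char === " " ? "\u00a0" : char,
    animationDelay: `${i * staggerMs}ms`,
  }));
}

console.log(staggerChars("No!", 60));
```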

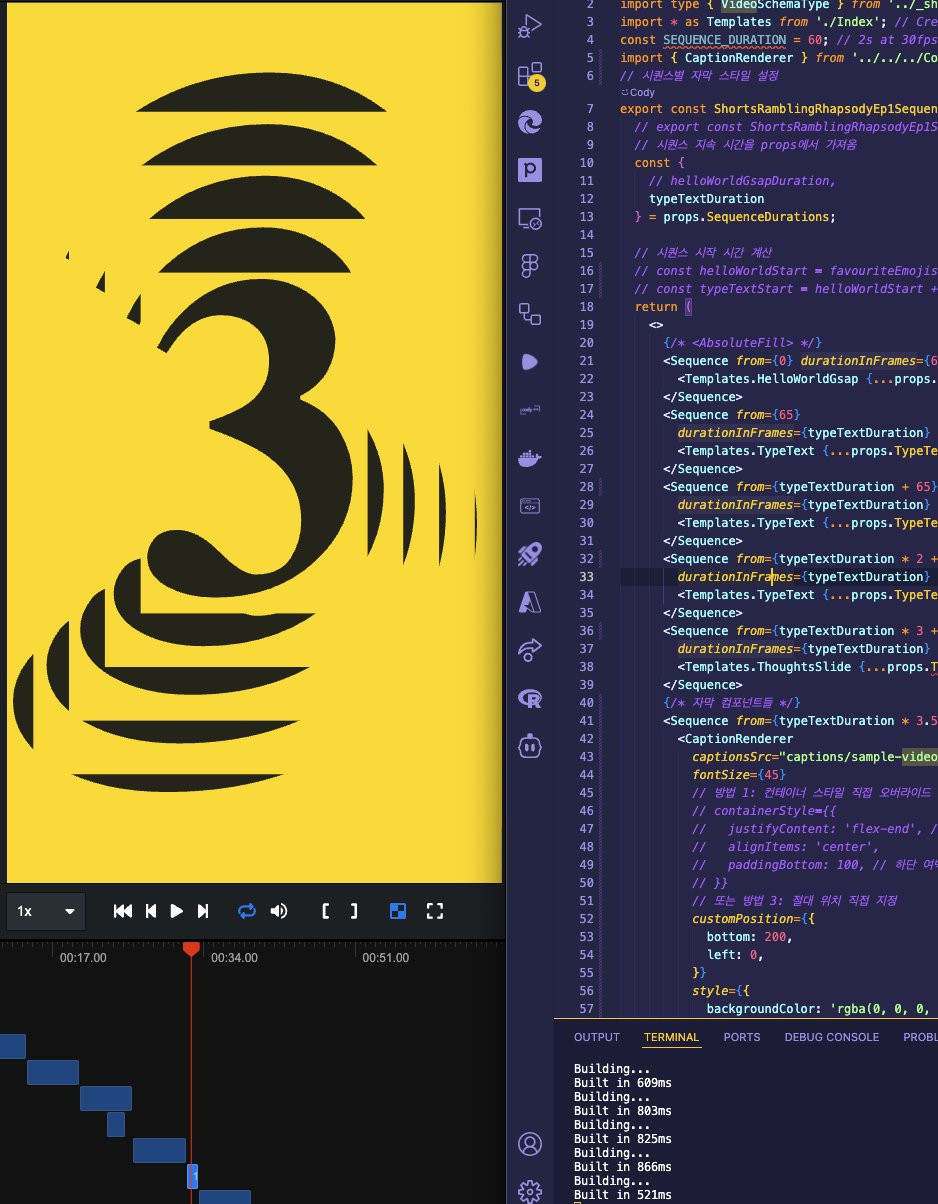

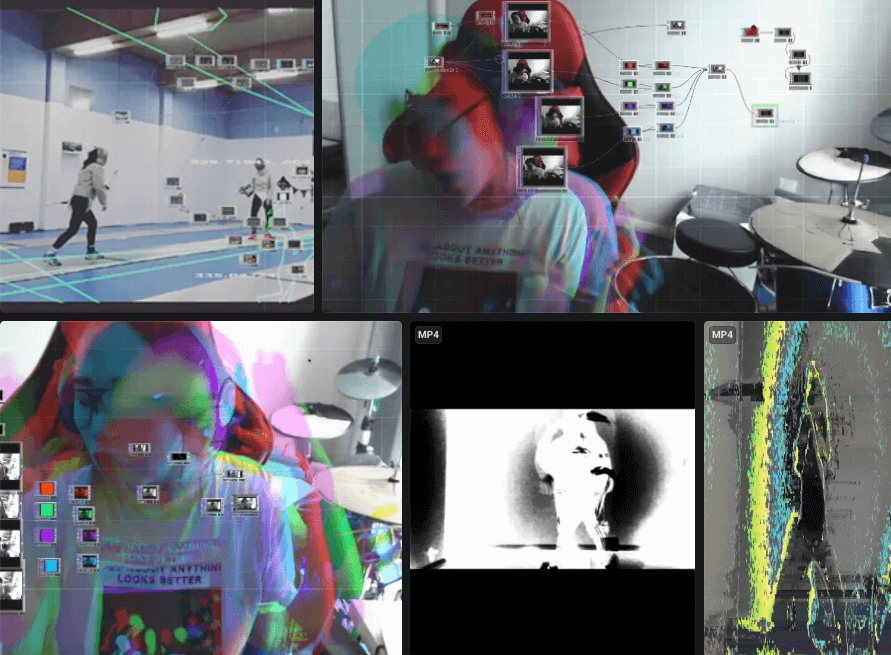

The anger didn’t subside easily. While hitting walls with sound work and tough uni classes, I stumbled across a framework called Remotion. It’s a motion graphics library based on React that lets you create videos programmatically.

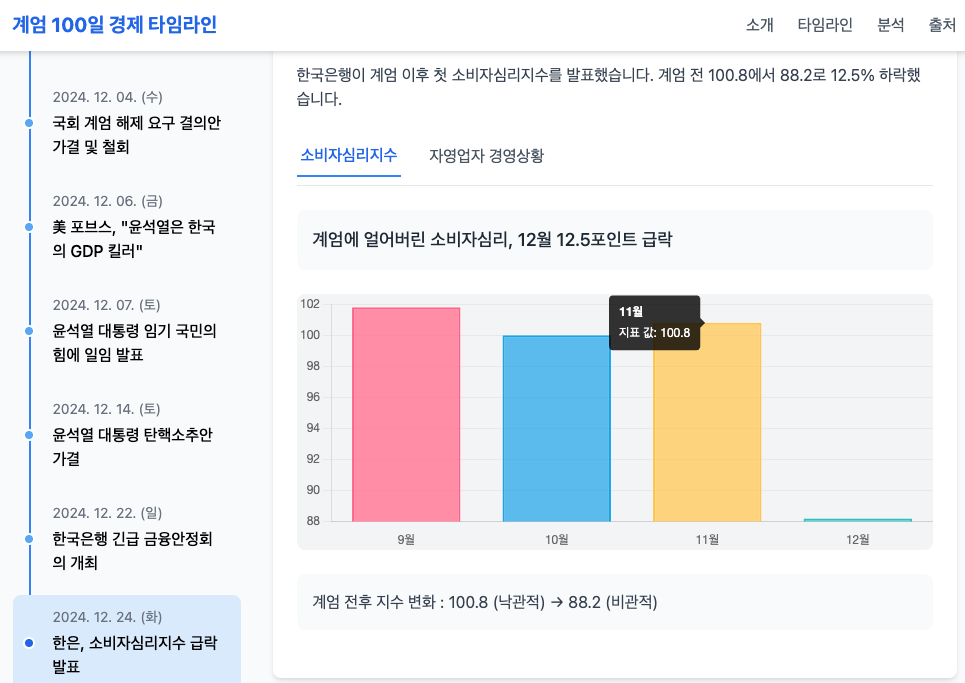

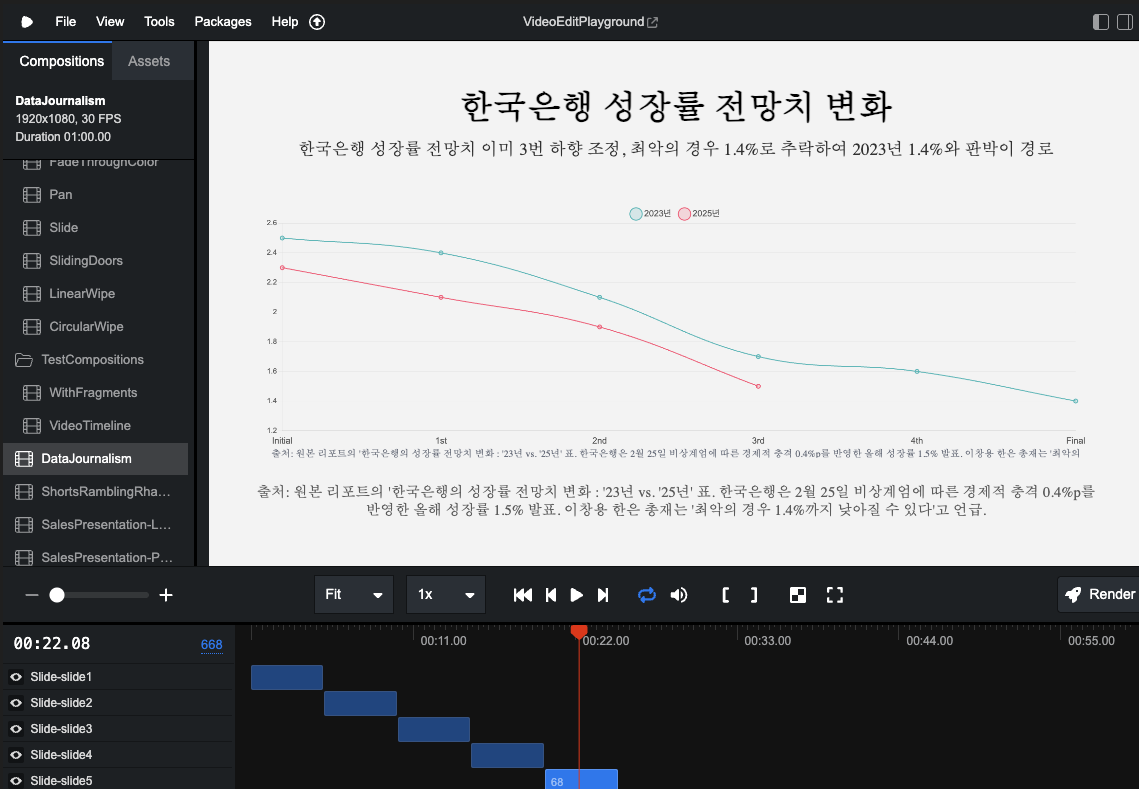

Remotion isn’t an intuitive GUI tool like After Effects or DaVinci Resolve, but the fact that it could output React components as video opened up new possibilities. Especially after the Martial Law incident, I realized I could import the chart components I made for visualizing economic indicators into Remotion and turn them into videos.
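What makes this work is that in Remotion every visual property is a pure function of the current frame number. In a real composition you would read the frame with `useCurrentFrame()` and map it with Remotion’s `interpolate()`. The dependency-free sketch below re-implements only the clamped linear case so the idea can be run on its own. The chart fade and the 30 fps value are illustrative assumptions.

```typescript
// Re-implements only the clamped, linear case of Remotion's
// interpolate(frame, inputRange, outputRange, { extrapolateLeft: "clamp", extrapolateRight: "clamp" }).
function interpolateClamped(
  frame: number,
  [inStart, inEnd]: [number, number],
  [outStart, outEnd]: [number, number],
): number {
  const t = (frame - inStart) / (inEnd - inStart);
  const clamped = Math.min(1, Math.max(0, t));
  return outStart + (outEnd - outStart) * clamped;
}

// Example: at 30 fps, fade a chart in over the first second (frames 0 to 30).
const fps = 30;
for (const frame of [0, 15, 30, 45]) {
  const opacity = interpolateClamped(frame, [0, fps], [0, 1]);
  console.log(`frame ${frame}: opacity ${opacity}`);
}
```

Because each value depends only on `frame`, Remotion can render frames out of order or in parallel and still produce the same video. That is why ordinary React components, once their animation is expressed this way, can be reused for video.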

During this period, I sussed out my own prototyping workflow.

```mermaid
graph TD
  A["1 Ideation"] --> B("2 Figma: images and design");
  B --> C("3 Filmora: quick sketches of motion ideas");
  C --> D("4 Jitter/Cavalry: prototyping in web-based tools");
  D --> E("5 Remotion: final motion implemented in code");
```

Tools like Jitter were super useful for making simple animation prototypes quickly and cheaply. Through this workflow, I could systematize the process of solidifying ideas and implementing them technically.

The fun thing about Remotion is that I can borrow UI components I developed for web apps and reuse them in motion videos.

For example, take a look at the Timeline Data Visualisation report page I developed in April.

Here’s how selected charts from this webpage look imported into Remotion with slight tweaks (since the logic frameworks differ slightly).

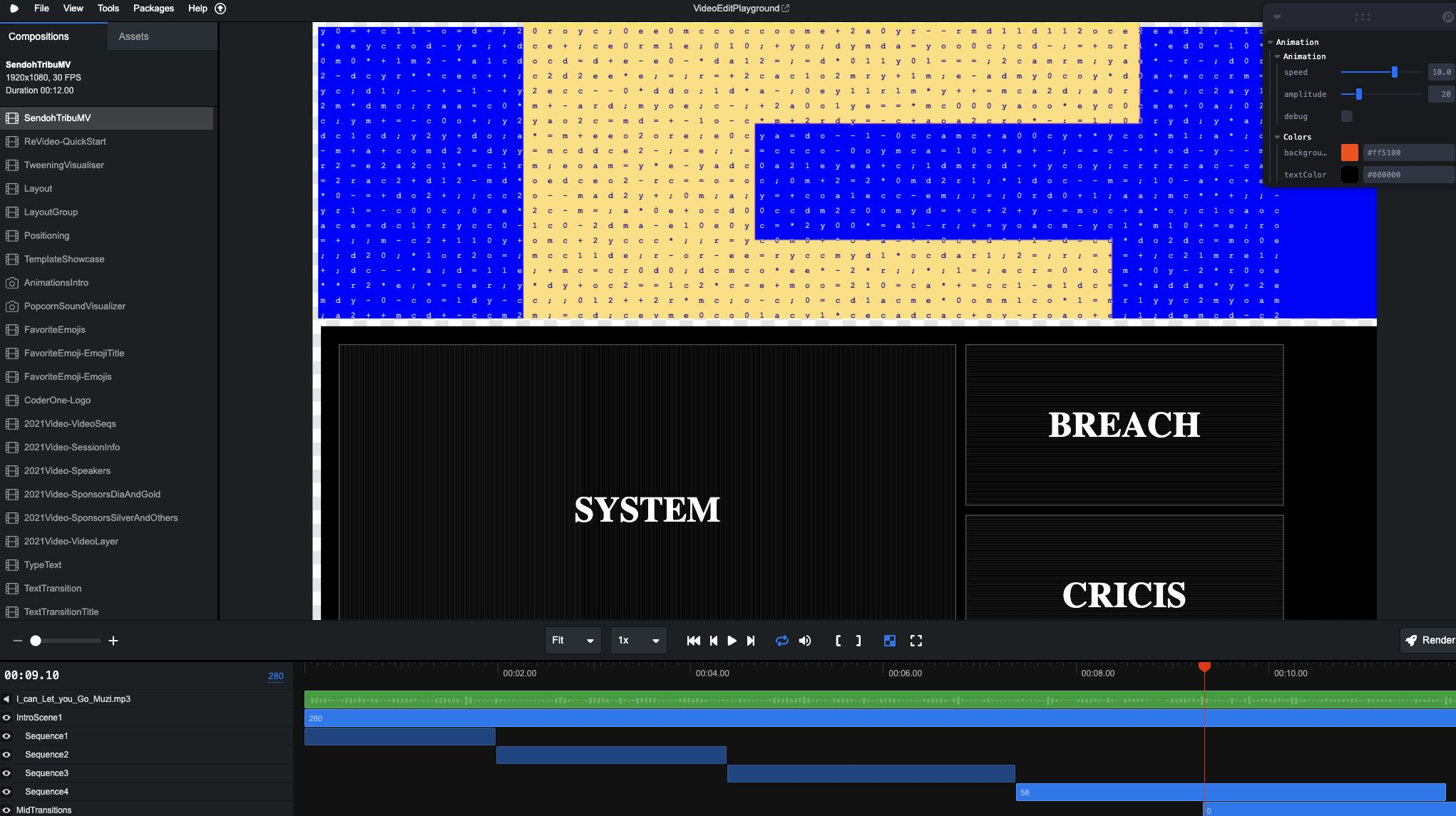

And this is a scene where I’m moving parts of a kinetic sketch from early 2025 into a Remotion-based GSAP codebase. In this way, sketch components originally built for UI can be turned into motion design components with just a bit of tweaking. Conversely, elements built for motion design can be reused in other website UIs. This freedom to cross between presentation layers is the real benefit of working with Remotion.
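One design choice that makes this kind of reuse cheap is to have a component take a normalized `progress` value (0 to 1) instead of owning its own timer. On a webpage, progress can come from a GSAP tween or the scroll position. In Remotion it can be derived from `useCurrentFrame()` and `useVideoConfig().durationInFrames`. The functions below are a hypothetical sketch of that split, not the actual report-page code.

```typescript
interface Datum {
  label: string;
  value: number;
}

const clamp01 = (x: number): number => Math.min(1, Math.max(0, x));

// Pure "view state" for a bar chart: heights depend only on the data and progress.
function barHeights(data: Datum[], progress: number, maxHeightPx: number): number[] {
  const max = Math.max(0, ...data.map((d) => d.value));
  if (max === 0) return data.map(() => 0);
  const p = clamp01(progress);
  return data.map((d) => (d.value / max) * maxHeightPx * p);
}

// Remotion side: turn a frame into progress. Inside a composition, frame and
// durationInFrames would come from useCurrentFrame() and useVideoConfig().
function progressFromFrame(frame: number, durationInFrames: number): number {
  return durationInFrames <= 1 ? 1 : clamp01(frame / (durationInFrames - 1));
}

const sample: Datum[] = [
  { label: "A", value: 10 },
  { label: "B", value: 5 },
];
console.log(barHeights(sample, progressFromFrame(45, 91), 200));
```

The chart itself never knows whether it is on a page or in a video. Only the small adapter that produces `progress` changes between the two.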

The Convergence of Tech and Visual Art in Full Swing

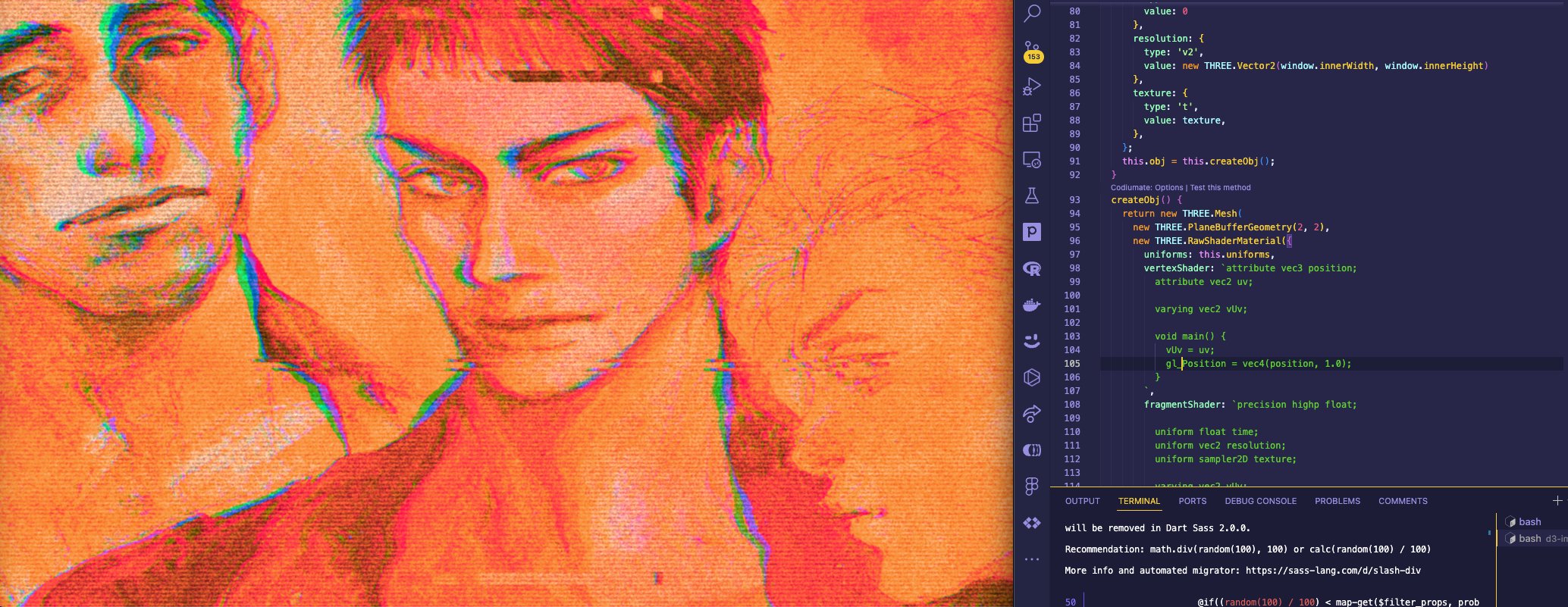

I started hooking Shaders into Remotion properly, experimenting with subculture aesthetics like glitch, break, neo-y2k, and vaporwave. Staying true to the Acid/Antiperfect design identity, I ignored grids and tested Dithering algorithms across various tools like TouchDesigner, Figma, and Remotion.
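The post doesn’t say which dithering algorithms were tested, so here is one common example. Ordered dithering with a 4×4 Bayer matrix compares each pixel against a threshold that depends on its position, which gives the crosshatch texture typical of retro graphics. The sketch assumes a grayscale image given as rows of 0 to 255 values and outputs pure black or white.

```typescript
// Standard 4×4 Bayer index matrix (values 0..15).
const BAYER_4X4 = [
  [0, 8, 2, 10],
  [12, 4, 14, 6],
  [3, 11, 1, 9],
  [15, 7, 13, 5],
];

// Ordered dithering: each pixel is compared with a threshold that depends
// only on its position inside the repeating 4×4 tile.
function bayerDither(gray: number[][]): number[][] {
  return gray.map((row, y) =>
    row.map((value, x) => {
      const threshold = ((BAYER_4X4[y % 4][x % 4] + 0.5) / 16) * 255;
      return value > threshold ? 255 : 0;
    }),
  );
}

// A flat mid-grey tile turns into an even pattern of black and white pixels.
const midGrey = Array.from({ length: 4 }, () => new Array<number>(4).fill(128));
console.log(bayerDither(midGrey).map((row) => row.map((v) => (v ? "#" : ".")).join("")).join("\n"));
```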

I converted displacement and chromatic aberration effects prototyped in TouchDesigner into GLSL code. By controlling this shader with GSAP timing, I succeeded in implementing a horizontal scroll animation in Remotion.
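Here is a sketch of how one timeline value could drive both the scroll and the shader. A normalized progress `t` goes through an ease-in-out curve to give the horizontal offset. The displacement uniform is set from `sin(πt)`, which is proportional to that ease’s velocity, so the aberration peaks while the strip moves fastest. The function and parameter names are hypothetical. With GSAP you might tween a plain object’s `t` from 0 to 1 and push the result into the uniform in an `onUpdate` callback.

```typescript
// Sine ease-in-out: starts and ends slowly, fastest at t = 0.5.
const easeInOutSine = (t: number): number => (1 - Math.cos(Math.PI * t)) / 2;

interface ScrollState {
  translateX: number; // px, applied to the strip of slides
  displacement: number; // value for the shader's u_displacement uniform
}

// Hypothetical helper: one progress value drives both the layout and the shader.
function horizontalScrollState(
  t: number,
  slideCount: number,
  slideWidthPx: number,
  maxDisplacement: number,
): ScrollState {
  const p = Math.min(1, Math.max(0, t));
  return {
    // Slide the strip left until the last slide is in view.
    translateX: -easeInOutSine(p) * (slideCount - 1) * slideWidthPx,
    // sin(πp) is proportional to the ease's derivative, so the effect tracks scroll speed.
    displacement: maxDisplacement * Math.sin(Math.PI * p),
  };
}

console.log([0, 0.5, 1].map((t) => horizontalScrollState(t, 3, 1080, 0.02)));
```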

TouchDesigner is a pretty incredible program. The community is active, and if you have a feel for Blender and shaders, you can write some GLSL and import it as an effect. TouchDesigner seems best for creating short clips with effects or building repeating loops for VJ visuals on a projector.

However, since my work often contains messages with a narrative arc and visual implications, I couldn’t work solely in TouchDesigner. Still, it’s the absolute best tool for creating prototype effect images or colorful, sensory visuals, making it optimal for creating footage for video editing.

So, I adopted a method of creating initial content in TouchDesigner, importing it into Remotion, and layering typing components on top. I imported a dance cover video of Jennie’s ‘Like Jennie,’ overlaid with images I tinkered with in TouchDesigner, into Remotion and applied shaders. Then, I put typography on the top layer to add a typing effect.
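A typing effect like this comes down to revealing `floor(seconds × charsPerSecond)` characters on each frame. The helpers below are a hypothetical, framework-free sketch, not the components used in the video. In a Remotion component, the `frame` and `fps` arguments would come from `useCurrentFrame()` and `useVideoConfig()`.

```typescript
// Hypothetical helper: the text visible on a given frame of a typewriter animation.
function typedText(fullText: string, frame: number, fps: number, charsPerSecond: number): string {
  const chars = Array.from(fullText); // split by code point, not by UTF-16 unit
  const elapsedSec = frame / fps;
  const count = Math.min(chars.length, Math.max(0, Math.floor(elapsedSec * charsPerSecond)));
  return chars.slice(0, count).join("");
}

// Optional blinking cursor: visible for half a second, hidden for half a second.
function cursorVisible(frame: number, fps: number): boolean {
  return Math.floor((frame / fps) * 2) % 2 === 0;
}

console.log(typedText("Like Jennie", 30, 30, 4) + (cursorVisible(30, 30) ? "|" : ""));
```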

And this typing work later became the foundation for producing experimental episodes of a short talk-show podcast made with Gemini TTS.

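```glsl
#version 300 es
// Simple chromatic aberration GLSL fragment shader (GLSL ES 3.00 / WebGL2).
// #version must come first in the shader, so this description sits below it.
precision mediump float;

uniform sampler2D u_texture;
uniform float u_displacement; // horizontal channel offset, in texture (UV) units

in vec2 v_texCoord;
out vec4 outColor;

void main() {
  // Sample the texture's R, G and B channels at slightly different coordinates
  float r = texture(u_texture, v_texCoord - vec2(u_displacement, 0.0)).r;
  float g = texture(u_texture, v_texCoord).g;
  float b = texture(u_texture, v_texCoord + vec2(u_displacement, 0.0)).b;

  outColor = vec4(r, g, b, 1.0);
}
```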
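Shader math is easier to sanity-check on the CPU. This sketch applies the same channel-offset idea to a single row of pixels. Red is sampled `offsetPx` to the left, blue to the right and green in place, with indices clamped at the edges (similar to `CLAMP_TO_EDGE` texture wrapping). It assumes whole-pixel offsets instead of UV units and skips filtering, so it is a simplified model of the shader above, not a drop-in replacement.

```typescript
type Pixel = [number, number, number]; // [r, g, b]

// CPU model of the shader: red sampled to the left, blue to the right, green in place.
// Indices are clamped at the row edges, similar to CLAMP_TO_EDGE texture wrapping.
function chromaticAberrationRow(row: Pixel[], offsetPx: number): Pixel[] {
  const at = (i: number): Pixel => row[Math.min(row.length - 1, Math.max(0, i))];
  return row.map((_, x): Pixel => [at(x - offsetPx)[0], row[x][1], at(x + offsetPx)[2]]);
}

// A single white pixel on black splits into blue, green and red fringes (left to right).
console.log(chromaticAberrationRow([[0, 0, 0], [255, 255, 255], [0, 0, 0]], 1));
```

Sampling red from the left shifts the red image to the right, and sampling blue from the right shifts it to the left. That is where the familiar colour fringes on either side of an edge come from.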

Then, from June to August, I took summer semester classes at uni and kept experimenting with effects like dithering, halftone, and glass FX on illustrations. I also tried making podcasts by generating audio with Gemini TTS and rendering YouTube podcast videos in code. During this time there was a blessing in disguise: math proof assignments helped me understand the principles behind shader implementation. I realized that mathematical tools like the FFT, eigenvectors, and PCA could be put to good use in artistic expression.
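For code-generated podcast videos, one piece of glue is turning a list of TTS clips into frame positions on the video timeline, for example the `from` and `durationInFrames` props of Remotion’s `<Sequence>`. The sketch assumes each clip’s length in seconds has already been measured from the generated audio file. The `Segment` shape and the gap parameter are hypothetical.

```typescript
interface Segment {
  speaker: string;
  durationSec: number; // measured from the generated audio clip
}

interface ScheduledSegment extends Segment {
  from: number; // first frame, as for <Sequence from={...}>
  durationInFrames: number;
}

// Hypothetical helper: lay clips end to end with an optional pause between them.
function scheduleSegments(segments: Segment[], fps: number, gapSec = 0): ScheduledSegment[] {
  const gapFrames = Math.round(gapSec * fps);
  let cursor = 0;
  return segments.map((seg) => {
    // Round up so the tail of a clip is never cut off.
    const durationInFrames = Math.ceil(seg.durationSec * fps);
    const scheduled: ScheduledSegment = { ...seg, from: cursor, durationInFrames };
    cursor += durationInFrames + gapFrames;
    return scheduled;
  });
}

const episode = scheduleSegments(
  [
    { speaker: "Host", durationSec: 2.5 },
    { speaker: "Guest", durationSec: 1 },
  ],
  30,
  0.5,
);
console.log(episode);
```

The composition’s total length is then the last segment’s `from + durationInFrames`.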

Ironically, the math knowledge I learned in grad school—which I wandered into after hitting a growth ceiling in the field—helped massively in constructing Generative Art and VFX GLSL Shaders.
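To make the FFT connection concrete: the FFT is a fast way to compute the discrete Fourier transform (DFT). The magnitudes of a short audio frame make handy inputs for audio-reactive visuals, for example one shader uniform per frequency band. The sketch below is the naive O(n²) DFT, which gives the same magnitudes as an FFT and is fine for small frames. Dividing by `n` is one normalization convention among several.

```typescript
// Naive O(n^2) discrete Fourier transform; an FFT computes the same values faster.
// Returns normalized magnitudes for bins 0..floor(n/2) (up to the Nyquist frequency).
function dftMagnitudes(samples: number[]): number[] {
  const n = samples.length;
  const magnitudes: number[] = [];
  for (let k = 0; k <= Math.floor(n / 2); k++) {
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const angle = (2 * Math.PI * k * t) / n;
      re += samples[t] * Math.cos(angle);
      im -= samples[t] * Math.sin(angle);
    }
    magnitudes.push(Math.hypot(re, im) / n);
  }
  return magnitudes;
}

// One full cosine cycle over 8 samples puts its energy into bin 1.
const tone = Array.from({ length: 8 }, (_, t) => Math.cos((2 * Math.PI * t) / 8));
console.log(dftMagnitudes(tone).map((m) => m.toFixed(3)));
```

A cosine of amplitude 1 shows up as 0.5 in its bin because, with this normalization, the other half of its energy sits in the mirrored negative-frequency bin.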

I’m reminded again of the philosophy I’ve always stated since I was a kid: Science, Math, Engineering, Tech, and Art are not separate fields at all. No one believes me when I say this, though. But who cares? My work proves it.

After the summer semester, I mobilized all the skills and aesthetics I’d built up to produce a remix music video. I packed in all the subculture mise-en-scène I loved: 80s psychedelic, 90s lo-fi, 2020s hyperpop, y2k glitchcore.

Once I settled on the imagery and aesthetic direction, I felt relieved. Now, I just have to keep chipping away at visual work with this mindset. I used a lot of Shaders and Remotion for that video visual, but solving every part of the video with just those two had efficiency issues. Sometimes it’s just heaps easier to use a Video Editor, and when linking WebGL and Remotion, processing nested shaders on the Canvas API is tough, so it was often better to just code the shader directly, make it an FX plugin for the Video Editor, and import it. Still, being able to use code and shaders myself for even partial work brings way more expandability than just brute-forcing everything with Motion Graphics tools.

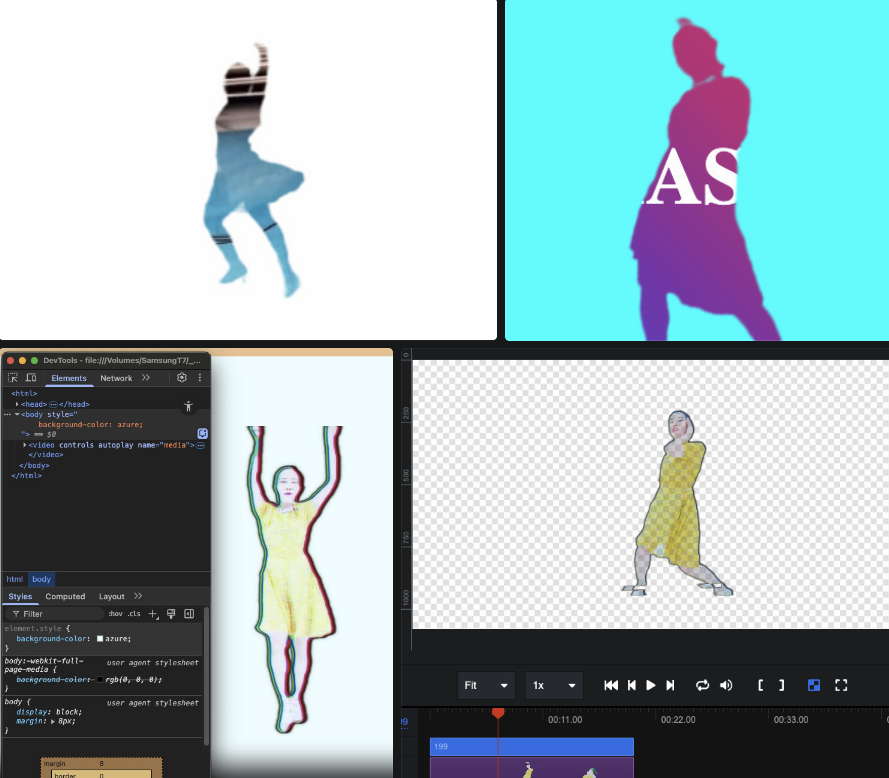

While working on the remix MV for Kim Wan-sun’s ‘Yellow’, I succeeded in masking and extracting specific parts of the video using Remotion’s Alpha Channel feature.

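```tsx
// Example of using an alpha-channel video in Remotion
import { OffthreadVideo, staticFile } from 'remotion';

export const MyComposition = () => {
  // WebM video file that includes an alpha channel, stored under public/videos/
  const videoWithAlpha = staticFile('videos/dancing-figure.webm');

  return (
    <div style={{ backgroundColor: 'blue' }}>
      {/* Render the alpha-channel video on top of the background.
          `transparent` makes OffthreadVideo extract frames as PNG so the alpha is kept. */}
      <OffthreadVideo src={videoWithAlpha} transparent />
    </div>
  );
};
```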

Doing this hard yakka, I did wonder, ‘Would it have been better to just learn DaVinci Resolve?’ But achieving this with only HTML5 Canvas and web tech gave me a huge sense of achievement (honestly, the pain of learning multiple editor tools vs pulling your hair out debugging code is pretty much six of one, half a dozen of the other).

Wrapping Up - Blurring the Lines Between Web and Video

The journey over the past year has brought me a deep understanding of the video rendering process via web engines, beyond just exploring web animation tech. The component-based design systems and code accumulated during this process have now become powerful weapons not just for motion graphics video production, but also for creating interactive webpages that dramatically emphasize aesthetics.

It has also become possible to turn components made for web UI into video content, and vice versa. I actually converted quite a few hero banner animation components from webpages into video designs.

Now, for me, the boundary between web and video is meaningless. Code is the canvas, and the browser is a new kind of editing tool. I’m staring at my VS Code editor every day, coming up with ideas for new creations that cross between these two worlds.